Table of Contents

- The 5-Microsecond Window That Separates Alpha from Adverse Selection

- Speed Is Fragmented: Why Venue Architecture Dictates Outcome

- CME Globex, Eurex T7, Nasdaq: Three Stacks, Three Optimization Calculi

- The Capital Cost of Undifferentiated Latency Monitoring

- The Venue-Latency Audit Framework: A CTO Diagnostic

- Conclusion: The Standard Has Already Been Set

The 5-Microsecond Window That Separates Alpha from Adverse Selection {#the-5-microsecond-window}

Aquilina, Budish, and O’Neill published their landmark analysis in the Quarterly Journal of Economics (2022) using microsecond-precision timestamps from the London Stock Exchange. Their finding deserves to sit on every Head of Trading’s desk: the modal duration of a latency arbitrage race — the window between capturing alpha and experiencing adverse selection — is 5 to 10 microseconds.

Not 5 milliseconds. Not 50 microseconds. Five to ten.

The median extends to 46 microseconds. The 90th percentile reaches roughly 200 microseconds. These races occur approximately once per minute, per symbol, for liquid instruments. Across FTSE 100 stocks, approximately 20% of total trading volume and 31% of all price impact are attributable to these races.

That 31% figure is the one most trading desks are not measuring. Conventional latency dashboards show round-trip times. They report p50, p99, and throughput. What they fail to surface is the portion of price impact generated by the latency arbitrage race dynamic — the moment your order arrives at a venue while another participant’s order has already moved the price against you. That loss does not appear as “latency.” It appears as “slippage.” And most P&L attribution systems file it under “market conditions.”

It is an infrastructure tax — quantifiable, addressable, and specific to each venue you trade.

The practitioners I work with and speak to regularly — CTOs and Heads of Electronic Trading at $500M+ AUM firms — face a structural diagnostic problem. They know their infrastructure underperforms. They see it in the P&L. What they consistently underestimate is how venue-specific the failure is. The latency problem at CME Globex is categorically different from the latency problem at Eurex T7 and different again from Nasdaq. Running a single optimization pass across your entire multi-venue stack will not close the gap. It will improve average numbers and leave the most expensive gaps untouched.

Speed Is Fragmented: Why Venue Architecture Dictates Outcome {#speed-is-fragmented}

I want to be precise here, because this is where generic latency advice breaks down.

“Low latency” as a concept has become nearly meaningless without a venue qualifier. A 60-microsecond round-trip to Eurex T7 (High Frequency session) is operating at the expected performance floor for that system. The same 60 microseconds at Nasdaq — where the matching engine’s fastest production implementation achieves 14 microseconds door-to-door and sub-40 microseconds is standard — represents significant underperformance that carries direct P&L consequences.

Three dimensions fragment the problem:

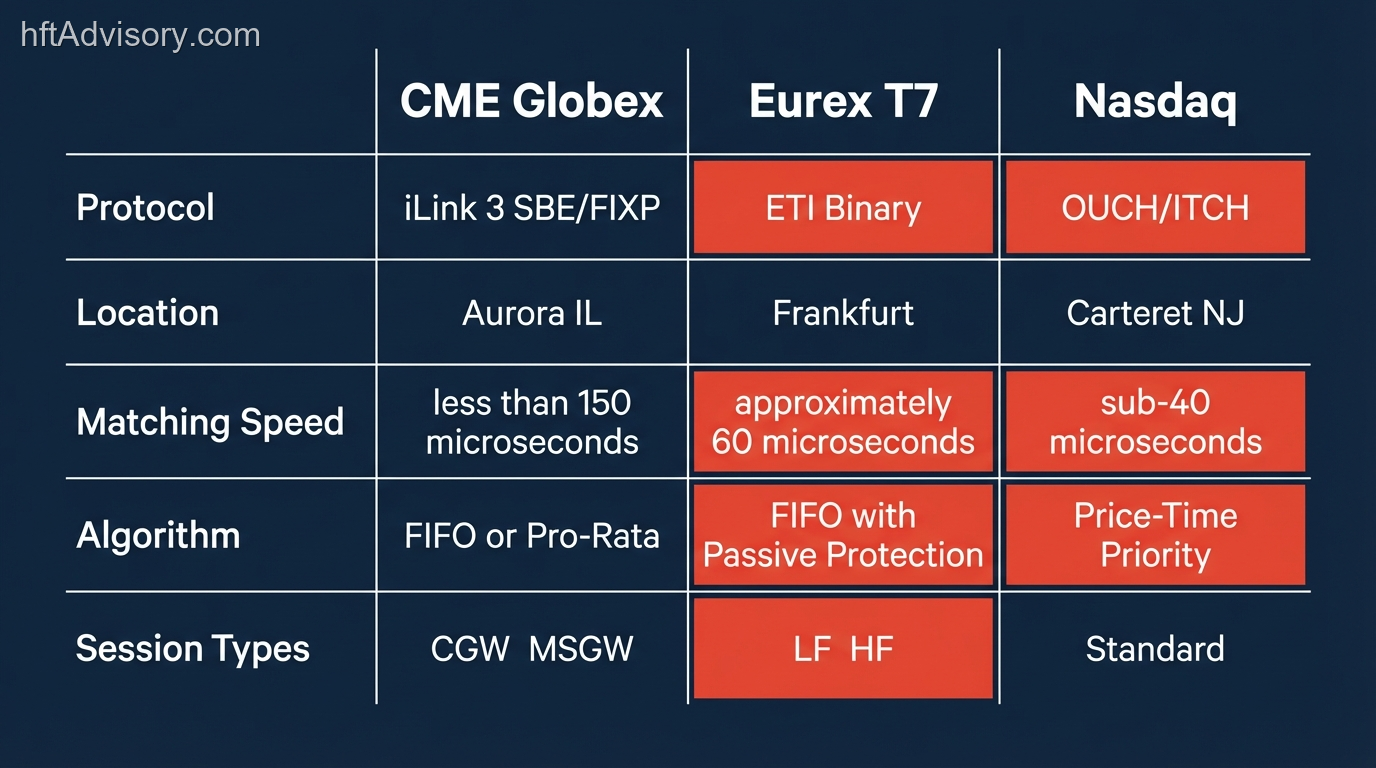

Protocol stack. CME Globex uses iLink 3, a binary protocol built on SBE (Simple Binary Encoding) and FIX Performance (FIXP). Eurex T7 uses its proprietary Enhanced Trading Interface (ETI), a binary protocol whose message semantics follow FIX 5.0 SP2. Nasdaq uses OUCH for order submission and ITCH for market data. Each protocol has distinct message structures, session state management, and recovery behavior. The throughput and jitter characteristics of each differ at the implementation level, not just at the network level. A system optimized for iLink 3 binary parsing has not been optimized for ETI. These are distinct engineering problems.

Geographic colocation. CME Globex matching runs in Aurora, Illinois, in the CME-built data center now operated by CyrusOne; Equinix CH1, CH2, and CH4 in downtown Chicago are connectivity hubs rather than the matching site. Eurex T7 runs in Frankfurt. Nasdaq’s primary matching for US equities runs in Carteret, New Jersey, at Equinix NY11. Physical distance between colocation facilities and matching engine hardware is not eliminable by software. Speed-of-light constraints create a latency floor for cross-venue strategies that must be explicitly modeled, not hoped away.
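The latency floor can be modeled with one line of physics. The sketch below is a minimal illustration, not a route model: the 1,000 km distance is a placeholder rather than a measured path between any two of the sites above, and the 1.47 refractive index is a typical textbook figure for single-mode fiber.

```python
# Speed of light in vacuum, in km per second (exact by SI definition).
C_VACUUM_KM_S = 299_792.458


def one_way_floor_us(distance_km: float, refractive_index: float = 1.0) -> float:
    """Lower bound on one-way propagation delay, in microseconds.

    refractive_index=1.0 is the vacuum bound (close to microwave through air);
    about 1.47 is a commonly quoted value for standard single-mode fiber.
    """
    if distance_km < 0 or refractive_index < 1.0:
        raise ValueError("distance must be >= 0 and refractive index >= 1")
    return distance_km * refractive_index / C_VACUUM_KM_S * 1e6


# Hypothetical straight-line distance between two colocation sites.
ASSUMED_DISTANCE_KM = 1_000.0

vacuum_floor_us = one_way_floor_us(ASSUMED_DISTANCE_KM)
fiber_floor_us = one_way_floor_us(ASSUMED_DISTANCE_KM, refractive_index=1.47)
print(f"vacuum: {vacuum_floor_us:.1f} us, fiber: {fiber_floor_us:.1f} us")
```

Real routes are longer than the straight line and add switching delay, so a measured cross-venue latency should always sit above this bound. The gap between the two is what is left to engineer.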

Matching algorithm. CME uses FIFO or pro-rata matching depending on the instrument; the applicable algorithm is published per product as a single-letter match algorithm code (for example, “F” for FIFO and “C” for pro-rata). Under FIFO, queue position determines fill priority, so queue management is as important as raw speed. Eurex T7 uses FIFO with passive liquidity protection introduced in 2024 for options, which changes the optimization calculus for options versus futures on the same exchange. Nasdaq uses strict price-time priority. These are not equivalent execution environments. A strategy tuned for queue priority under CME FIFO will behave differently when ported to a pro-rata instrument on the same exchange, let alone cross-venue.
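A toy comparison shows why the same resting order has a different value under each algorithm. This is a simplified sketch: real exchange pro-rata rules add minimum allocations, top-order priority, and other refinements that are omitted here, and the order identifiers and sizes are hypothetical.

```python
from typing import Dict, List, Tuple

Order = Tuple[str, int]  # (order id, resting quantity), listed in arrival order


def allocate_fifo(resting: List[Order], incoming_qty: int) -> Dict[str, int]:
    """Fill resting orders strictly in arrival order."""
    fills: Dict[str, int] = {}
    remaining = incoming_qty
    for order_id, qty in resting:
        if remaining == 0:
            break
        take = min(qty, remaining)
        fills[order_id] = take
        remaining -= take
    return fills


def allocate_pro_rata(resting: List[Order], incoming_qty: int) -> Dict[str, int]:
    """Simplified pro-rata: floor of size-weighted share, leftovers by arrival."""
    total = sum(qty for _, qty in resting)
    if total == 0:
        return {}
    fillable = min(incoming_qty, total)
    fills = {oid: (qty * fillable) // total for oid, qty in resting}
    leftover = fillable - sum(fills.values())
    for oid, qty in resting:
        if leftover == 0:
            break
        extra = min(qty - fills[oid], leftover)
        fills[oid] += extra
        leftover -= extra
    return fills


# Hypothetical book at one price level: a small early order and a large late one.
book = [("early_small", 10), ("late_large", 90)]
print(allocate_fifo(book, 50))
print(allocate_pro_rata(book, 50))
```

Under FIFO the early order is filled in full because it arrived first. Under this pro-rata variant it receives only its size-weighted share, so being early is worth less and resting size is worth more.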

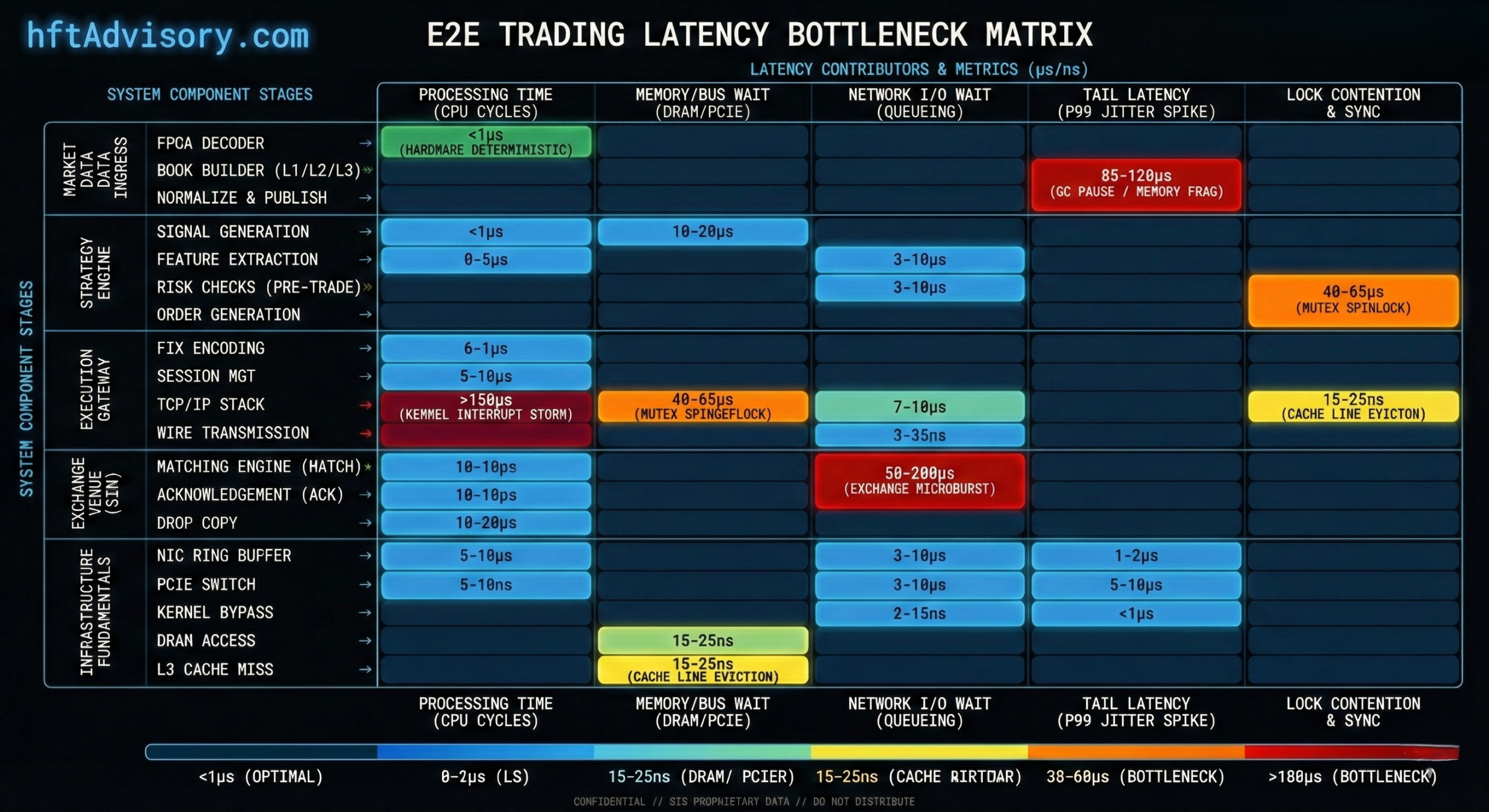

The compounding problem is that most latency optimization programs I have observed treat the trading stack as a single entity to be tuned. The reality is a matrix. Each cell in that matrix — venue, asset class, session type, protocol — has a different failure mode and a different optimization lever.

CME Globex, Eurex T7, Nasdaq: Three Stacks, Three Optimization Calculi {#three-stacks}

CME Globex: Protocol Migration Risk and the Infrastructure Snapshot

As of December 31, 2024, CME completed the mandatory migration from iLink 2 to iLink 3. Any firm that had not completed that migration was on deprecated infrastructure as of Q1 2025. The move from FIX-based to SBE/FIXP binary is not cosmetic — binary encoding reduces message size and parsing latency at the wire level. But it also introduces new session management requirements, heartbeat logic, and recovery protocol differences that must be handled correctly under stress.
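The size and parsing difference between text and binary encodings can be illustrated with a toy message. The fixed layout below is hypothetical and is not the iLink 3 SBE schema; the tag-value form uses real FIX tag numbers (11, 44, 38, 54) but omits the header, trailer, and checksum that a real FIX message carries.

```python
import struct

# Hypothetical fixed layout (NOT the iLink 3 schema): little-endian
# uint64 order id, int64 price in ticks, uint32 quantity, uint8 side.
LAYOUT = struct.Struct("<QqIB")


def encode_binary(order_id: int, price_ticks: int, qty: int, side: int) -> bytes:
    return LAYOUT.pack(order_id, price_ticks, qty, side)


def decode_binary(buf: bytes) -> tuple:
    # Fields sit at fixed offsets, so decoding needs no scanning.
    return LAYOUT.unpack(buf)


def encode_tag_value(order_id: int, price_ticks: int, qty: int, side: int) -> bytes:
    fields = [(11, order_id), (44, price_ticks), (38, qty), (54, side)]
    return b"".join(f"{tag}={val}\x01".encode("ascii") for tag, val in fields)


def decode_tag_value(buf: bytes) -> dict:
    # Text decoding has to scan for delimiters and convert digits to integers.
    out = {}
    for field in buf.split(b"\x01"):
        if field:
            tag, _, val = field.partition(b"=")
            out[int(tag)] = int(val)
    return out


order = (123456789, 500025, 10, 1)
binary_msg = encode_binary(*order)
text_msg = encode_tag_value(*order)
print(len(binary_msg), len(text_msg))
```

The binary form has a constant size regardless of field values, which also makes parse time more predictable. That predictability, rather than the byte count alone, is what tends to matter for jitter.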

The CME matching engine processes orders in less than 150 microseconds. Port selection carries a direct cost implication as well: a 10G port runs approximately $12,000 per month, versus $350-$550 per month for standard cross-connects. That is roughly a 22x to 34x premium on the fastest access tier, and it is a capital allocation decision that only makes economic sense if the rest of the stack can take advantage of the bandwidth without introducing jitter elsewhere.
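The premium multiple implied by the quoted prices can be checked with a few lines of arithmetic. The figures are the approximate monthly prices given above; the function and variable names are illustrative only.

```python
def premium_multiple(premium_monthly: float, baseline_monthly: float) -> float:
    """How many times more the premium tier costs than the baseline tier."""
    if baseline_monthly <= 0:
        raise ValueError("baseline price must be positive")
    return premium_monthly / baseline_monthly


PORT_10G_MONTHLY = 12_000           # approximate figure quoted in the text
CROSS_CONNECT_MONTHLY = (350, 550)  # quoted range for standard cross-connects

# The highest multiple comes from the cheapest baseline, and vice versa.
high_multiple = premium_multiple(PORT_10G_MONTHLY, CROSS_CONNECT_MONTHLY[0])
low_multiple = premium_multiple(PORT_10G_MONTHLY, CROSS_CONNECT_MONTHLY[1])

# Incremental annual spend per port against the most expensive baseline.
annual_increment = (PORT_10G_MONTHLY - CROSS_CONNECT_MONTHLY[1]) * 12

print(f"{low_multiple:.1f}x to {high_multiple:.1f}x, +${annual_increment:,} per year")
```

Per port the incremental spend is on the order of $137,000 a year, which is the number to weigh against the measured adverse selection cost on that venue.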

In June 2024, CME announced a migration of Globex to a new private Google Cloud region and colocation facility in Aurora, Illinois, with a target completion date of 2028. An eighteen-month client notice has been issued. The stated commitment is equal network latency to markets whether firms choose self-managed or IaaS options. The infrastructure teams at major desks are already modeling the migration path. The firms that wait until 2027 to start that work will compress the transition into a period of maximum market volatility risk.

Eurex T7: Passive Liquidity Protection and the Options/Futures Divergence

Eurex reported over 1.2 billion contracts traded in H1 2025. The T7 system’s median round-trip for the High Frequency session is approximately 60 microseconds. For firms running futures strategies on Eurex, the optimization environment is relatively stable.

For options on Eurex, the environment changed materially in 2024. Eurex introduced passive liquidity protection specifically to prevent sniping by the fastest participants — a structural intervention that changes the payoff to latency optimization in the options market. A strategy that depended on speed advantage against resting liquidity in Eurex options is operating in a different regime post-2024.

The instrument-specific nature of this change is precisely the problem with generic optimization. A desk running both Eurex futures and Eurex options under a unified latency monitoring framework may see average metrics that look acceptable while the options book experiences systematic adverse selection that the aggregated report masks.
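The masking effect is easy to reproduce with made-up numbers. In the sketch below the segment names, fill counts, and markout values are all hypothetical; the point is only that a volume-weighted aggregate can look tolerable while one segment is badly adverse.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical per-fill markouts in basis points; negative means adverse.
samples = [("futures", -0.1)] * 900 + [("options", -3.0)] * 100


def mean_by_segment(rows):
    """Average markout per segment instead of across the whole book."""
    buckets = defaultdict(list)
    for segment, value in rows:
        buckets[segment].append(value)
    return {segment: fmean(values) for segment, values in buckets.items()}


aggregate_bps = fmean([value for _, value in samples])
by_segment_bps = mean_by_segment(samples)

print(f"aggregate: {aggregate_bps:.2f} bps")
for segment, value in by_segment_bps.items():
    print(f"{segment}: {value:.2f} bps")
```

With these inputs the aggregate sits near -0.39 bps while the options segment runs at -3.0 bps, almost eight times worse than the headline number suggests.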

Nasdaq: Feed Differential and the A/B Side Problem

Nasdaq’s matching engine achieves sub-40 microsecond latency as standard, with some production implementations reported at under 15 microseconds door-to-door. The exchange operates in over 70 markets globally. But the data feed architecture introduces a latency asymmetry that matters at HFT speeds.

The Nasdaq TotalView-ITCH feed has an A-side and B-side implementation. The B-side trails the A-side by approximately 500 microseconds or more. In standard latency reporting, this differential is often invisible — it appears as network variability rather than a systematic bias. At HFT execution speeds, 500 microseconds on the data feed is not a rounding error. It is a systematic information disadvantage that affects quote update processing and adverse selection exposure.

The question of which firms are operating on the A-side versus the B-side, and whether their order management systems are correctly accounting for the feed differential in their market data pipeline, is one that deserves explicit audit rather than assumption.
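One way to make the feed differential explicit is to record both sides and arbitrate per sequence number. This is a minimal sketch under stated assumptions: messages are already decoded into hypothetical `(seq_no, side, recv_ns)` tuples, and the timestamps are invented rather than captured from a real feed.

```python
def arbitrate(messages):
    """Pick the first-arriving copy of each sequence number.

    messages: iterable of (seq_no, side, recv_ns), with side "A" or "B".
    Returns (winner_by_seq, b_minus_a_ns); the second dict only contains
    sequence numbers that were seen on both sides.
    """
    first_seen = {}  # seq_no -> {side: earliest receive timestamp}
    for seq_no, side, recv_ns in messages:
        per_side = first_seen.setdefault(seq_no, {})
        if side not in per_side or recv_ns < per_side[side]:
            per_side[side] = recv_ns

    winner_by_seq = {
        seq_no: min(per_side, key=per_side.get)
        for seq_no, per_side in first_seen.items()
    }
    b_minus_a_ns = {
        seq_no: per_side["B"] - per_side["A"]
        for seq_no, per_side in first_seen.items()
        if "A" in per_side and "B" in per_side
    }
    return winner_by_seq, b_minus_a_ns


# Hypothetical capture: sequence 2 arrived first on the B side,
# and sequence 3 was only ever seen on the A side.
capture = [
    (1, "A", 1_000_000), (1, "B", 1_500_000),
    (2, "B", 2_000_000), (2, "A", 2_400_000),
    (3, "A", 3_000_000),
]
winners, gaps_ns = arbitrate(capture)
print(winners)
print(gaps_ns)
```

Tracking the distribution of `b_minus_a_ns` as its own series turns the differential from apparent network noise into a measurable bias, and a pipeline consuming only one side would show up immediately as missing entries.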

The Capital Cost of Undifferentiated Latency Monitoring {#capital-cost}

The Knight Capital Group failure on August 1, 2012 remains the clearest case study in what happens when infrastructure inconsistency goes undetected across a multi-server deployment. A technician failed to deploy new code to one of eight SMARS servers. On that eighth server, dormant Power Peg code, unused since 2003, was reactivated by a repurposed flag and began executing live orders. In 45 minutes, Knight lost $440 million.

The technical root cause was not slow execution. The root cause was an assumption of consistency across an infrastructure stack that was, in that moment, inconsistent. The monitoring systems reported “normal” because the seven servers behaving correctly outnumbered the one that was not.
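The check that was missing is a comparison against the intended release, not against what most servers happen to be running. The sketch below is an illustration of that idea only, not a reconstruction of Knight's systems; the host names and build identifiers are hypothetical.

```python
def find_drift(expected_build: str, build_by_host: dict) -> dict:
    """Return every host whose deployed build differs from the intended one."""
    return {
        host: build
        for host, build in sorted(build_by_host.items())
        if build != expected_build
    }


# Hypothetical fleet of eight servers; one missed the rollout.
fleet = {f"host-{i}": "release-2" for i in range(1, 8)}
fleet["host-8"] = "release-1"

drift = find_drift("release-2", fleet)

# Gate activation on zero drift: a single stale host blocks the rollout,
# no matter how many healthy hosts outnumber it.
safe_to_enable = not drift
print(drift, safe_to_enable)
```

The design choice is that the gate fails closed on any deviation. A majority vote would have reported seven of eight as healthy, which is exactly the assumption of consistency described above.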

The pattern generalizes. Standard latency reports aggregate across venues, sessions, and instruments. They surface average behavior. A single venue with systematically elevated adverse selection does not necessarily move the aggregate number enough to trigger a review. The loss shows up in P&L, attributed to “market conditions” or “unfavorable fills,” and the engineering team spends the next quarter optimizing average latency by 2 microseconds on the already-healthy venues.

The 2010 Flash Crash research (Kirilenko et al.) documented another dimension of this problem: HFT behavior changes dramatically during stress events. Firms that had been providing liquidity withdrew it and submitted aggressive orders, consuming more liquidity than they provided at exactly the point when their models were most uncertain. Separate work on the VPIN (Volume-Synchronized Probability of Informed Trading) measure reported that it reached unprecedented levels in the intervals preceding the crash, with order-flow toxicity rising faster than market makers could adjust their quotes.

The operational implication: a latency audit conducted under normal market conditions tells you how your infrastructure performs when nothing is broken. It does not tell you how it performs under the conditions where performance matters most. Venue-specific stress testing — calibrated to the specific matching algorithm, session type, and protocol of each exchange — is a different exercise from a generic load test.

In my experience advising trading desks, the most expensive latency problems are the ones that pass the standard report with acceptable numbers. They are invisible precisely because the monitoring architecture treats the stack as homogeneous when the market structure does not.

The Venue-Latency Audit Framework: A CTO Diagnostic {#audit-framework}

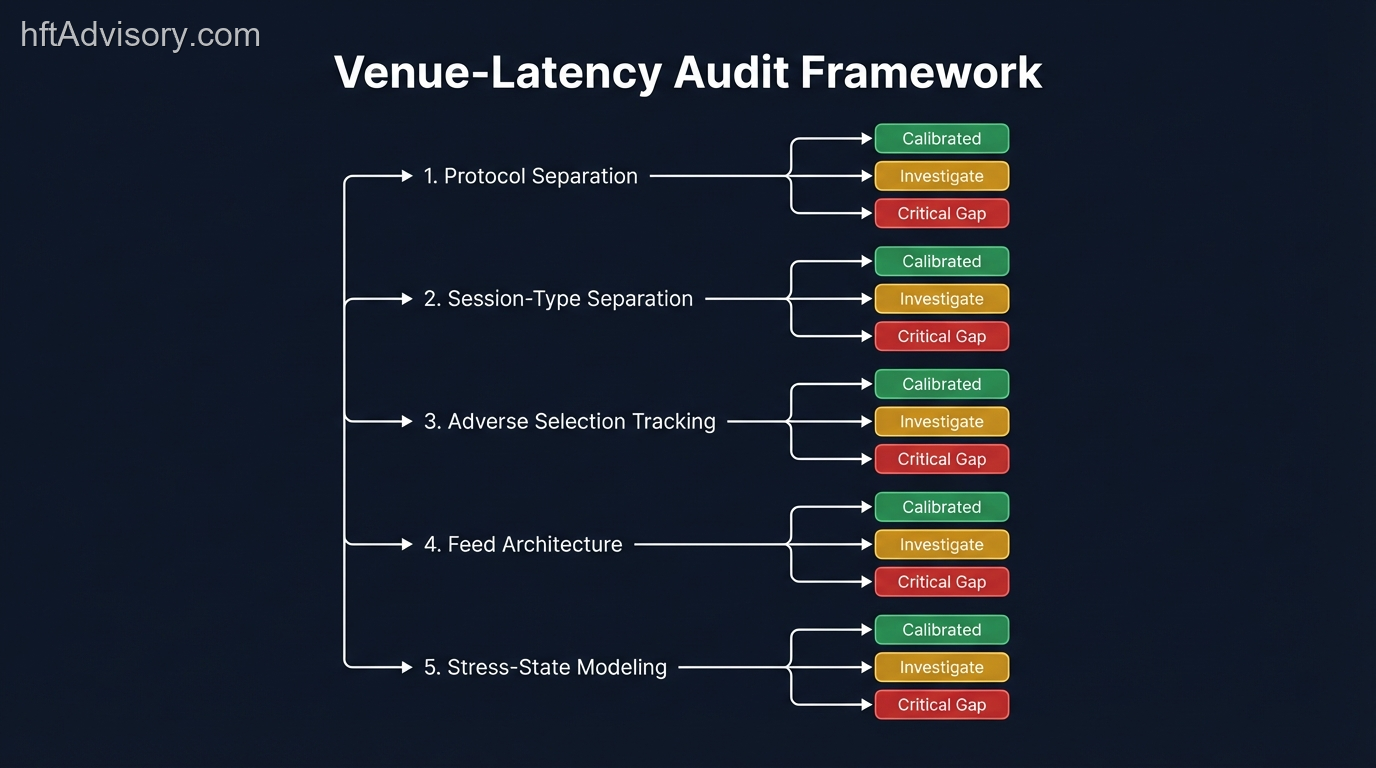

The following diagnostic framework is the “map.” The implementation — the actual remediation of each gap — is a separate engagement. The map, however, is worth having before the next quarterly review.

Dimension 1: Protocol-Level Separation

Does your latency monitoring distinguish between iLink 3 performance, ETI performance, and OUCH/ITCH performance as separate data series? If your monitoring aggregates across protocols, the answer is no. Each protocol has distinct parse-time characteristics and jitter profiles. Aggregate monitoring will not surface protocol-specific regressions.

Dimension 2: Session-Type Separation

For Eurex T7: are you tracking High Frequency session performance separately from Low Frequency and Low Frequency Back Office sessions? For CME: are you tracking CGW versus MSGW session performance separately? Session-type mixing in your latency metrics is equivalent to measuring morning rush-hour and 3am highway traffic in the same average.
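The traffic analogy can be made concrete by computing percentiles per session type instead of over the pooled samples. This is a minimal sketch using nearest-rank percentiles; the venue and session labels and all round-trip values are hypothetical.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile for an integer pct in 1..100."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def percentiles_by_key(samples, pcts=(50, 99)):
    """samples: iterable of (key, round_trip_us); returns per-key percentiles."""
    groups = defaultdict(list)
    for key, rtt_us in samples:
        groups[key].append(rtt_us)
    return {key: {p: percentile(vals, p) for p in pcts} for key, vals in groups.items()}


# Hypothetical round-trip samples in microseconds for two session types.
samples = [(("EUREX_T7", "HF"), 60)] * 90 + [(("EUREX_T7", "LF"), 400)] * 10

pooled = [rtt for _, rtt in samples]
pooled_p50, pooled_p99 = percentile(pooled, 50), percentile(pooled, 99)
per_session = percentiles_by_key(samples)

print(pooled_p50, pooled_p99)
print(per_session)
```

In the pooled view the p50 describes one session and the p99 describes the other, so neither number characterizes either session. Keying the series by (venue, session) removes that ambiguity, and the same key can be extended with protocol and asset class.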

Dimension 3: Instrument-Level Adverse Selection Tracking

Do you have per-instrument adverse selection metrics that are independent of your execution speed metrics? Adverse selection — the cost of orders that consistently fill at a price that immediately moves against you — is the financial output of latency failure. If you are not measuring it per instrument per venue, you are measuring the wrong thing and the P&L erosion is invisible until it accumulates.
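A common way to measure adverse selection directly is a signed markout: compare each fill price with the mid price a fixed interval later. The sketch below assumes that approach; the markout horizon, venue and instrument labels, and prices are all hypothetical, and the tuple layout is chosen for illustration.

```python
from collections import defaultdict
from statistics import fmean


def markout_bps(side: str, fill_px: float, later_mid: float) -> float:
    """Signed markout in basis points; negative means the price moved against the fill."""
    if side not in ("buy", "sell"):
        raise ValueError("side must be 'buy' or 'sell'")
    sign = 1.0 if side == "buy" else -1.0
    return sign * (later_mid - fill_px) / fill_px * 1e4


def mean_markout_by_instrument(fills):
    """fills: iterable of (venue, instrument, side, fill_px, later_mid)."""
    buckets = defaultdict(list)
    for venue, instrument, side, fill_px, later_mid in fills:
        buckets[(venue, instrument)].append(markout_bps(side, fill_px, later_mid))
    return {key: fmean(values) for key, values in buckets.items()}


# Hypothetical fills, with the mid price sampled at a fixed horizon after each fill.
fills = [
    ("VENUE_A", "FUT1", "buy", 5000.00, 4999.75),
    ("VENUE_A", "FUT1", "sell", 5000.00, 5000.50),
    ("VENUE_B", "OPT1", "buy", 10.00, 10.01),
]
report = mean_markout_by_instrument(fills)
for key, value in report.items():
    print(key, round(value, 2))
```

Because the metric depends only on fills and subsequent prices, it stays independent of the execution speed series. A persistently negative mean for one (venue, instrument) pair is the signal that the aggregate P&L attribution hides.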

Dimension 4: Feed Architecture Verification

For Nasdaq: have you verified which side of the A/B feed your market data pipeline is consuming? For CME: have you confirmed your market data recovery behavior under the iLink 3 session management protocol? Feed-level latency and recovery characteristics are upstream of order submission latency. Getting the order out fast on top of a slow or unreliable feed does not close the adverse selection gap.

Dimension 5: Stress-State Performance Modeling

Has your latency architecture been tested under conditions that replicate flash-event behavior, not just under normal load? Aquilina et al. (2022) attribute 31% of price impact to latency arbitrage races, which means a material share of your exposure sits in moments that expected-state monitoring never isolates. The stress-state behavior (when matching engine queue depth spikes, when feed dissemination lags, when your colocation infrastructure sees resource contention) is where the capital destruction concentrates.

The Diagnostic Standard

Scoring this audit honestly produces one of three outcomes for each dimension:

- Calibrated: Separate, venue-specific metrics exist. Adverse selection is measured per instrument. Stress testing has been conducted in the past 6 months.

- Investigate: Metrics exist but are aggregated. Some venue-specific analysis occurs ad hoc, not systematically.

- Critical Gap: Monitoring is unified across venues. Adverse selection is inferred from P&L, not measured directly. No structured stress-state testing protocol exists.

A desk with three or more “Critical Gap” ratings across these five dimensions is operating with a latency profile that standard reporting will not fully expose until it shows up materially in quarterly P&L.
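The scoring rule can be written down so that the result is reproducible from one review to the next. This is a small sketch of the three-rating, five-dimension scheme described above; the dimension keys and the example scores are illustrative names, not part of any standard.

```python
from enum import Enum


class Rating(Enum):
    CALIBRATED = "Calibrated"
    INVESTIGATE = "Investigate"
    CRITICAL_GAP = "Critical Gap"


# Illustrative keys for the five audit dimensions.
DIMENSIONS = (
    "protocol",
    "session_type",
    "adverse_selection",
    "feed_architecture",
    "stress_state",
)


def critical_gaps(scores: dict) -> list:
    """List the dimensions rated Critical Gap; every dimension must be scored."""
    missing = set(DIMENSIONS) - set(scores)
    if missing:
        raise ValueError(f"unscored dimensions: {sorted(missing)}")
    return [d for d in DIMENSIONS if scores[d] is Rating.CRITICAL_GAP]


def needs_architectural_review(scores: dict, threshold: int = 3) -> bool:
    return len(critical_gaps(scores)) >= threshold


# Hypothetical audit result for one desk.
example = {
    "protocol": Rating.INVESTIGATE,
    "session_type": Rating.CRITICAL_GAP,
    "adverse_selection": Rating.CRITICAL_GAP,
    "feed_architecture": Rating.CALIBRATED,
    "stress_state": Rating.CRITICAL_GAP,
}
print(critical_gaps(example), needs_architectural_review(example))
```

Requiring every dimension to be scored is deliberate: an unscored dimension is treated as an error rather than silently counted as healthy.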

Conclusion: The Standard Has Already Been Set {#conclusion}

The empirical baseline is documented: 5 to 10 microseconds is the window in which alpha capture and adverse selection separate, and 31% of all price impact is attributable to the race that happens in that window. The matching engines are running — CME at sub-150 microseconds, Eurex T7 at approximately 60 microseconds for the HF session, Nasdaq at sub-40 microseconds with a 14-microsecond production floor. FPGA systems now achieve tick-to-trade latencies of 100 to 500 nanoseconds.

The infrastructure gap between the standard and most desks’ current monitoring architecture is not a technology problem. It is a diagnostic precision problem. The venues are not monolithic. The optimization is not generic. The loss is not market conditions.

The standard for venue-specific latency monitoring is: per-protocol, per-session, per-instrument adverse selection metrics, validated under stress conditions at each colocation site. If your current architecture cannot produce that report, the gap between what your dashboard shows and what your P&L experiences is worth auditing systematically — not next quarter, but before the next flash event.

If this audit surfaces three or more Critical Gaps — that is the architectural review conversation. A Discovery Assessment is the structured starting point. Details at electronictradinghub.com.

Originally shared as a LinkedIn post by Ariel Silahian.

References:

- Aquilina, M., Budish, E., O’Neill, P. (2022). “Quantifying the High-Frequency Trading ‘Arms Race.’” Quarterly Journal of Economics, 137(1).

- Kirilenko, A., Kyle, A., Samadi, M., Tuzun, T. (2017). “The Flash Crash: High-Frequency Trading in an Electronic Market.” Journal of Finance, 72(3).

- CME Group iLink 3 Technical Specifications (2024).

- Eurex T7 System Architecture Documentation (2024).

- Nasdaq TotalView-ITCH Protocol Specification.
