Table of Contents

- November 28, 2025: Aurora Data Center Goes Dark

- The Exposure Pattern: When Exchange State Disappears

- State Independence: The Architectural Separation

- The Reconciliation Engine: Three Critical Layers

- Knight Capital: $460M Lost in 45 Minutes

- The NASDAQ Facebook IPO: 38,000 Orders in Purgatory

- FIX Protocol ACOD: The Disconnect Defense

- IOSCO June 2024: Venue Outages Rising

- Diagnostic Checklist: 12 State Independence Questions

November 28, 2025: Aurora Data Center Goes Dark {#november-28-2025}

November 28, 2025. 10:00 AM Central Time. CME Group’s Aurora, Illinois data center lost cooling capacity. Within minutes, temperature sensors triggered automatic shutdown protocols across trading infrastructure. $600 billion in S&P 500 options positions froze mid-session. $60 billion daily FX flow stopped processing. The outage lasted 10-11 hours.

The cooling failure made headlines. The state management failure made P&L statements bleed.

In my 20+ years architecting trading systems, from tier-1 HFT desks to multi-asset hedge funds, I’ve watched this exact scenario destroy risk controls. The CME outage exposed a vulnerability I see in 60% of audits: trading firms treat exchange state as ground truth rather than maintaining independent reconciliation layers.

The Exposure Pattern: When Exchange State Disappears {#the-exposure-pattern}

Your Order Management System (OMS) transmits a $50M equity basket order at 09:45:23. The exchange acknowledges receipt. Your position tracking shows $50M long exposure. Risk limits adjust accordingly. Then: exchange feed death.

The Questions That Dictate P&L:

- Did the order fill before the outage?

- Did partial fills execute at 09:45:27 while confirmation messages sat in dead transmission queues?

- Did exchange-side logic cancel the order under disconnect protocols?

- Does your OMS believe you’re $50M long while the exchange reflects $0 exposure?

This gap — the window between “what your systems believe” and “what the exchange processed” — governs every decision during reconnection. A pattern I’ve observed across high-frequency shops: position reconciliation failures emerge within 90 seconds of unexpected disconnections. Average cost: $9.3M per hour of trading floor downtime according to 2024 Ponemon Institute infrastructure reliability data.

State Independence: The Architectural Separation {#state-independence}

State independence defines an architecture where your risk management logic calculates position, exposure, and limit utilization without requiring live exchange confirmation feeds.

Three foundational components:

1. Internal State Machine

Your OMS maintains its own order lifecycle tracking. Send Order → Pending Acknowledgment → Acknowledged → Partial Fill → Fully Filled. This state machine advances based on exchange messages during normal operations. During disconnection, it freezes at last-known state and flags uncertainty.

2. Reconciliation Layer

A dedicated process compares your internal state against exchange drop-copy feeds, clearing house reports, and post-trade settlement data. Gap detection runs continuously. When discrepancies emerge — your OMS shows 10,000 shares filled, exchange drop-copy reports 12,000 — the reconciliation engine escalates to manual review queues.

3. Conservative Fallback Logic

When exchange state becomes unavailable, risk controls default to maximum-possible-exposure assumptions. If the last confirmed state was “order acknowledged, awaiting fill,” your system assumes a full fill occurred at the worst possible price until reconciliation proves otherwise.
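A minimal Python sketch of how the internal state machine and the conservative fallback could fit together. It assumes a long-only buy order and a caller-supplied `worst_price`; the class and method names (`TrackedOrder`, `on_disconnect`, `fallback_exposure`) are hypothetical and do not come from any particular OMS.

```python
from dataclasses import dataclass
from enum import Enum, auto


class OrderState(Enum):
    PENDING_ACK = auto()
    ACKNOWLEDGED = auto()
    PARTIALLY_FILLED = auto()
    FILLED = auto()


# Forward-only transitions for the lifecycle listed above.
ALLOWED = {
    OrderState.PENDING_ACK: {OrderState.ACKNOWLEDGED},
    OrderState.ACKNOWLEDGED: {OrderState.PARTIALLY_FILLED, OrderState.FILLED},
    OrderState.PARTIALLY_FILLED: {OrderState.PARTIALLY_FILLED, OrderState.FILLED},
    OrderState.FILLED: set(),
}


@dataclass
class TrackedOrder:
    order_id: str
    quantity: int              # buy quantity in shares (long-only sketch)
    worst_price: float         # assumed worst-case fill price (hypothetical input)
    state: OrderState = OrderState.PENDING_ACK
    filled: int = 0
    filled_notional: float = 0.0
    uncertain: bool = False

    def _advance(self, target):
        if target not in ALLOWED[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {target.name}")
        self.state = target

    def on_ack(self):
        self._advance(OrderState.ACKNOWLEDGED)

    def on_fill(self, qty, price):
        if qty <= 0 or self.filled + qty > self.quantity:
            raise ValueError("fill quantity out of range")
        done = self.filled + qty == self.quantity
        self._advance(OrderState.FILLED if done else OrderState.PARTIALLY_FILLED)
        self.filled += qty
        self.filled_notional += qty * price

    def on_disconnect(self):
        # Freeze at the last-known state; anything not fully filled is uncertain.
        if self.state is not OrderState.FILLED:
            self.uncertain = True

    def fallback_exposure(self):
        """Confirmed notional, plus the unfilled remainder at worst price if uncertain."""
        if not self.uncertain:
            return self.filled_notional
        remaining = self.quantity - self.filled
        return self.filled_notional + remaining * self.worst_price
```

In this sketch the uncertainty flag is never cleared automatically. Clearing it would be the reconciliation layer's job once drop-copy or clearing data confirms the true fill quantity.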

The Reconciliation Engine: Three Critical Layers {#reconciliation-engine}

A diagnostic framework I provide to CTOs during infrastructure audits:

Layer 1: Pre-Trade Position Verification

Before transmitting any order, verify current position state against exchange pre-trade risk checks. If your OMS calculates 50,000 shares of available buying power but the exchange pre-trade response shows 48,000, a reconciliation failure exists before order submission. This 2,000-share gap becomes a $40,000 problem when fills execute at $20/share against non-existent margin.
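The arithmetic in that example can be written as a small pre-trade gate. This is a sketch only: `pre_trade_gap` is a hypothetical helper, and it assumes both figures are already expressed in shares of the same instrument.

```python
def pre_trade_gap(oms_shares, exchange_shares, price):
    """Return (gap in shares, gap in notional) between OMS and exchange views."""
    gap_shares = oms_shares - exchange_shares
    return gap_shares, abs(gap_shares) * price


# Figures from the example above: 50,000 vs 48,000 shares at $20/share.
gap, notional = pre_trade_gap(50_000, 48_000, 20.0)
ok_to_send = gap == 0  # any mismatch blocks submission until it is explained
```

Blocking on any non-zero gap is the strictest possible policy. A desk might instead allow a small tolerance, but that threshold would be a business decision rather than something the example dictates.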

Layer 2: Intra-Day Reconciliation Checkpoints

Every 60 seconds, compare your position aggregation against exchange drop-copy feed summaries. Automated reconciliation engines scan for:

- Fill quantity mismatches (you believe 10,000 filled, exchange reports 10,200)

- Price discrepancies (your average fill $50.12, exchange average $50.09)

- Order status contradictions (your OMS shows “working,” exchange shows “cancelled”)

Flag any variance exceeding 0.1% of notional value for immediate investigation.
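A sketch of one such checkpoint for a single order, using the three checks listed above and the 0.1% threshold. The `Snapshot` type and `checkpoint` function are hypothetical. The text does not say which notional the 0.1% applies to, so measuring the variance against the larger of the two notionals is an assumption.

```python
from dataclasses import dataclass

THRESHOLD = 0.001  # 0.1% of notional, per the rule above


@dataclass
class Snapshot:
    filled_qty: int
    avg_price: float
    status: str


def checkpoint(ours, theirs, threshold=THRESHOLD):
    """Compare the OMS view with the drop-copy view of one order."""
    issues = []
    if ours.filled_qty != theirs.filled_qty:
        issues.append("fill_quantity")
    if ours.avg_price != theirs.avg_price:
        issues.append("price")
    if ours.status != theirs.status:
        issues.append("status")

    our_notional = ours.filled_qty * ours.avg_price
    their_notional = theirs.filled_qty * theirs.avg_price
    # Assumption: variance is relative to the larger of the two notionals.
    base = max(abs(our_notional), abs(their_notional))
    variance = abs(our_notional - their_notional) / base if base else 0.0
    return issues, variance, variance > threshold


# Values taken from the three bullet examples above.
oms_view = Snapshot(10_000, 50.12, "working")
exchange_view = Snapshot(10_200, 50.09, "cancelled")
issues, variance, escalate = checkpoint(oms_view, exchange_view)
```

With these inputs all three checks fire and the notional variance is close to 1.9%, well above the 0.1% threshold. A production version would likely use `decimal.Decimal` for prices rather than floats.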

Layer 3: End-of-Day Settlement Cross-Check

After market close, cross-reference your internal position book against:

- Exchange clearing house settlement reports

- Prime broker position statements

- Custodian records

Discrepancies discovered at this layer cost money — you’ve spent the entire trading day making risk decisions based on incorrect position assumptions.
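The end-of-day cross-check can be sketched as a comparison of the internal book against each external source in turn. The function name, source labels, symbols, and quantities below are illustrative assumptions, and positions are taken to be signed share counts keyed by symbol.

```python
def cross_check(internal, sources):
    """Return (symbol, source, internal_qty, source_qty) for every break.

    internal: {symbol: signed quantity}
    sources:  {source name: {symbol: signed quantity}}
    """
    breaks = []
    for name, book in sources.items():
        # Union of symbols so positions missing on either side are caught.
        for symbol in sorted(internal.keys() | book.keys()):
            ours = internal.get(symbol, 0)
            theirs = book.get(symbol, 0)
            if ours != theirs:
                breaks.append((symbol, name, ours, theirs))
    return breaks


internal_book = {"AAPL": 1000, "MSFT": -500}
external = {
    "clearing": {"AAPL": 1000, "MSFT": -500},
    "prime_broker": {"AAPL": 1200, "MSFT": -500},
    "custodian": {"AAPL": 1000},
}
eod_breaks = cross_check(internal_book, external)
```

Here the prime broker disagrees on AAPL and the custodian has no MSFT position at all, so two breaks are reported. Which source wins a disagreement is a policy question this sketch leaves open.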

Failure Mode Awareness:

When did you last test reconciliation under simulated feed loss? Most firms discover gaps during actual outages, when discovery costs $9.3M/hour. The question CTOs should ask their teams: “If CME feeds die for 11 hours starting right now, which positions would we be uncertain about, and what’s our maximum possible exposure error?”

Knight Capital: $460M Lost in 45 Minutes {#knight-capital}

August 1, 2012. Knight Capital Group deployed new order-routing software. The new code was not copied to one of eight production servers, and a repurposed configuration flag reactivated dormant legacy code on that server. That code, retired years earlier, began sending live market orders without proper state management controls.

The system generated 4 million trades across 154 stocks in 45 minutes. Knight Capital’s internal position tracking lost synchronization with actual exchange fills. When engineers finally killed the process, the firm discovered a $460 million loss. The company needed emergency rescue financing to survive and was acquired the following year.

The root cause: deployment processes failed to validate state management logic in production configurations. The legacy code no longer tracked cumulative fills against its parent orders, so it kept sending child orders after those parents had already been filled. The exchange processed real executions. No reconciliation layer caught the divergence until positions reached catastrophic levels.

The NASDAQ Facebook IPO: 38,000 Orders in Purgatory {#nasdaq-facebook}

May 18, 2012. Facebook’s IPO opened on NASDAQ at 11:30 AM — 30 minutes late. NASDAQ’s order matching engine encountered a bug in cross-order processing logic. 38,000 orders entered “pending” state — acknowledged by NASDAQ systems but not matched for execution.

Trading firms saw acknowledgments. They assumed fills were processing normally. Their OMSs advanced order states to “working.” Meanwhile, NASDAQ’s internal order book held these orders in unprocessed queues.

When NASDAQ finally resolved the technical issue, participants discovered massive order state discrepancies. Some firms believed they had zero exposure when they actually held millions of shares. Others calculated large positions that didn’t exist. NASDAQ ultimately paid $62 million in compensation for order execution and confirmation failures.

The architectural lesson: exchange acknowledgment of order receipt does not equal order processing completion. State independence dictates maintaining uncertainty markers for acknowledged-but-unconfirmed orders.

FIX Protocol ACOD: The Disconnect Defense {#fix-protocol-acod}

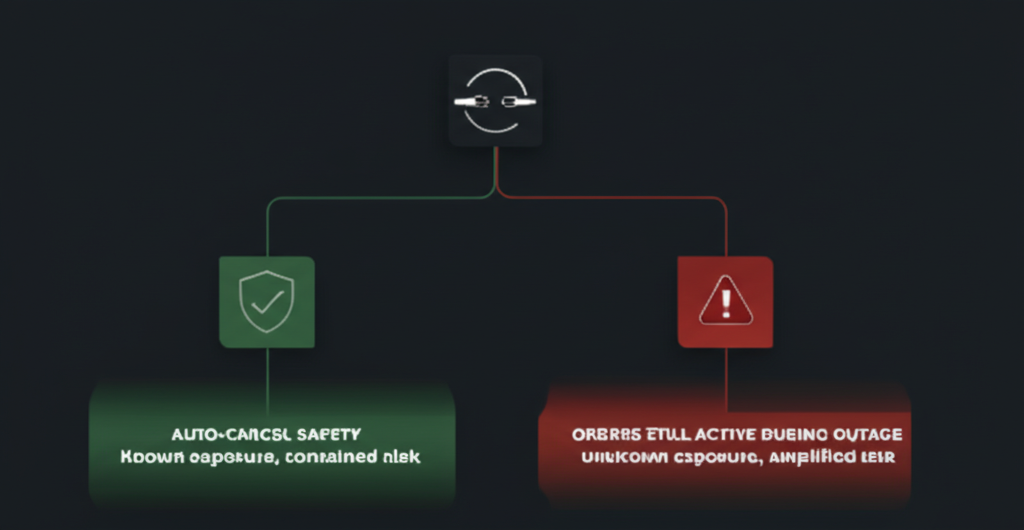

FIX order-entry sessions at many venues support Auto Cancel on Disconnect (ACOD) mechanisms. When your trading connection to an exchange drops, ACOD logic automatically cancels the working orders associated with that session, subject to each venue’s rules on which order types are eligible.

Purpose: prevent “ghost orders” — working orders in exchange order books when your systems have lost ability to monitor or cancel them.

ACOD configuration governs exposure during connection failures:

- ACOD Enabled: Exchange cancels all working orders on disconnect. Your maximum exposure = already-filled positions.

- ACOD Disabled: Exchange maintains working orders. Your maximum exposure = already-filled positions + all working orders, assuming they execute at worst-possible prices during the reconnection window.
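The two cases reduce to a simple calculation. The sketch below assumes that an enabled ACOD setting cancels every working order on the session, which in practice depends on the venue and the order type. The `max_exposure` function and its inputs are hypothetical.

```python
def max_exposure(filled_notional, working_orders, acod_enabled):
    """Worst-case notional exposure at the moment of disconnect.

    working_orders: iterable of (remaining_qty, worst_price) pairs.
    Assumes ACOD, when enabled, cancels all working orders (venue-specific).
    """
    if acod_enabled:
        return filled_notional
    return filled_notional + sum(qty * price for qty, price in working_orders)


working = [(1_000, 50.0), (2_000, 25.0)]
with_acod = max_exposure(1_000_000.0, working, acod_enabled=True)
without_acod = max_exposure(1_000_000.0, working, acod_enabled=False)
```

The difference between the two results is the exposure that the configuration choice alone adds, before any market movement is considered.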

During the CME November 28 outage, ACOD settings determined which firms faced “known exposure” versus “potential exposure plus all working order risk.” A configuration choice made months earlier dictated P&L vulnerability during 11 hours of market data blindness.

IOSCO June 2024: Venue Outages Rising {#iosco-report}

The International Organization of Securities Commissions (IOSCO) published a June 2024 analysis of exchange outages across 47 jurisdictions. Key findings:

- Venue outages increased 23% year-over-year (2022-2023)

- Average outage duration: 2.4 hours

- 31% of outages resulted in position reconciliation failures for market participants

- Median financial impact per participant: $2.1M per outage event

The report emphasized state management resilience as a critical infrastructure requirement. Regulatory focus is shifting from “prevent all outages” (impossible) to “maintain position certainty during outages” (architecturally achievable).

Diagnostic Checklist: 12 State Independence Questions {#diagnostic-checklist}

Questions CTOs should ask their Head of Trading Technology to assess state management architecture:

Visibility Questions:

1. If our primary market data feed dies right now, which positions would we be uncertain about within 60 seconds?

2. What’s our maximum possible exposure error if reconciliation fails for 4 hours?

3. Do we maintain independent position tracking that doesn’t require live exchange confirmations?

Reconciliation Questions:

4. How frequently do we compare our position book against exchange drop-copy feeds?

5. What variance threshold triggers automated reconciliation alerts?

6. When did we last test reconciliation processes under simulated feed loss?

Fallback Questions:

7. What does our risk system assume about order state when exchange feeds disappear?

8. Do we default to maximum-exposure assumptions during uncertainty windows?

9. Are our conservative fallback positions calibrated to actual worst-case fill scenarios?

Configuration Questions:

10. Which exchanges have ACOD enabled on our trading connections?

11. Did we consciously choose those ACOD settings based on exposure analysis, or inherit them from default configurations?

12. What exposure gap would we face if a CME-magnitude outage hit our largest trading venue right now?

Answering these questions reveals whether your architecture maintains position certainty when exchange state becomes unavailable — or whether you’re flying blind the moment feeds die.

Source Material

This analysis draws from publicly available information about the November 28, 2025 CME Group outage, historical incidents including Knight Capital (August 2012) and the NASDAQ Facebook IPO (May 2012), the IOSCO June 2024 venue resilience report, and architectural patterns observed across 20+ years of architecting trading infrastructure for tier-1 HFT firms and multi-asset hedge funds.

I’m the creator of VisualHFT, an open-source market microstructure analysis platform with 1,000+ GitHub stars, and author of two published books on electronic trading systems.

When trading venues experience infrastructure failures — cooling systems, network partitions, software bugs — the firms that maintain state independence face known exposure windows. The firms that treat exchange state as ground truth face unknown exposure amplification.

If your current architecture cannot answer the 12 diagnostic questions above with precision, we should review your state management design.

Ready to assess your infrastructure’s resilience to venue outages? Schedule a Discovery Assessment at hftadvisory.com

Originally shared as a LinkedIn post