Table of Contents

1. The $1.5 Trillion Settlement Asymmetry

2. Why T+1 Still Isn’t Fast Enough

3. Scenario 1: The Capital Efficiency Gap (24 Hours vs 5 Minutes)

4. Scenario 2: The Protocol Translation Bottleneck (FIX vs REST/WebSocket)

5. Scenario 3: The Uptime Requirement Mismatch (99.5% vs 99.95%)

6. The 3 AM Volatility Problem: When Legacy Operations Fail

7. Deterministic Failover: Architecting for Autonomous Recovery

8. The Rebuild Decision: Architecture Assessment Framework

9. The Path Forward

The $1.5 Trillion Settlement Asymmetry

JP Morgan Kinexys has processed over $1.5 trillion in tokenized transactions since its inception as Onyx Digital Assets in 2020, with current daily volumes exceeding $5 billion. The platform achieves settlement finality in minutes. Meanwhile, even after the May 28, 2024 transition to T+1 settlement, traditional clearing houses still trap capital for 24 hours in settlement cycles.

The gap is operational, and it’s measured in trapped liquidity.

I’ve spent two decades architecting high-frequency trading systems across traditional and digital asset markets. The infrastructure asymmetry we’re observing in 2026 is the most significant competitive discontinuity I’ve witnessed since the transition from floor trading to electronic execution. This is what happens when systems designed for batch processing meet markets that never sleep.

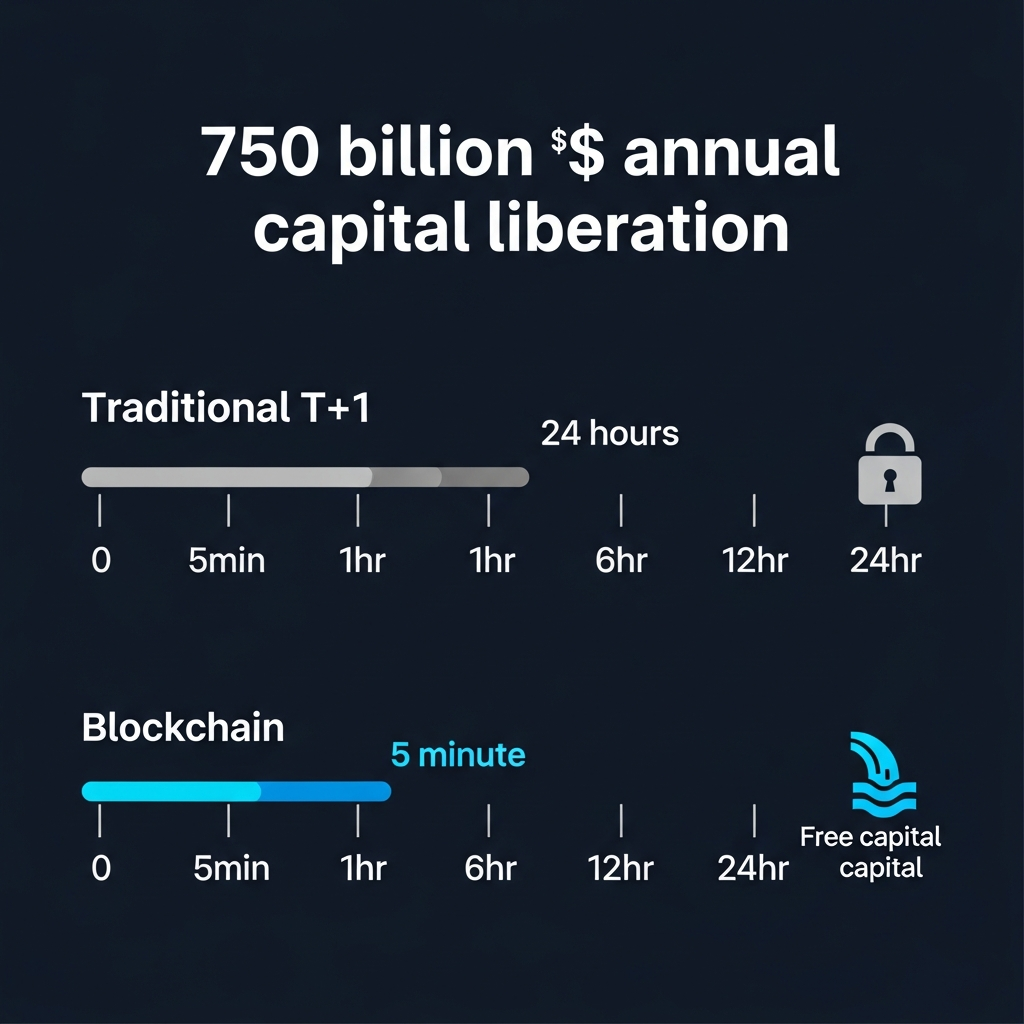

Why T+1 Still Isn’t Fast Enough

The May 28, 2024 transition from T+2 to T+1 settlement was celebrated as a major advancement. According to SIFMA, the change freed approximately $750 billion in trapped capital annually across U.S. equity and bond markets. The operational efficiency gains were real.

But 24 hours of settlement latency is still an eternity when your competitors achieve finality in 5 minutes.

Consider the capital efficiency calculation: A traditional desk trading $1 billion daily in equities has $1 billion locked in settlement at any given time under T+1. That same desk operating on blockchain rails with 5-minute settlement has, on average, $3.5 million in settlement float—a 99.65% reduction in trapped capital.
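The float figures above follow from a steady-state relationship (Little’s law): average capital in settlement equals daily traded notional multiplied by the fraction of a day each trade spends unsettled. Here is a minimal sketch. It assumes trades arrive evenly around the clock and treats T+1 as a flat 24 hours, ignoring weekends and holidays. The function names are illustrative:

```python
MINUTES_PER_DAY = 24 * 60  # T+1 treated as a flat 24-hour settlement window


def settlement_float(daily_notional: float, settlement_minutes: float) -> float:
    """Average capital in settlement (Little's law: arrival rate x time in system)."""
    return daily_notional * settlement_minutes / MINUTES_PER_DAY


def capital_turns_per_day(settlement_minutes: float) -> float:
    """How many times the same capital can settle and be redeployed in one day."""
    return MINUTES_PER_DAY / settlement_minutes


daily = 1_000_000_000                          # $1B traded per day
t1 = settlement_float(daily, MINUTES_PER_DAY)  # T+1 settlement
fast = settlement_float(daily, 5)              # 5-minute finality
reduction = 1 - fast / t1

print(f"T+1 float:     ${t1:,.0f}")
print(f"5-min float:   ${fast:,.0f}")
print(f"Reduction:     {reduction:.2%}")
print(f"Turns per day: {capital_turns_per_day(5):.0f}")
```

Running it reproduces the text’s numbers: about $3.47 million of float at 5-minute finality, a 99.65% reduction, and 288 capital turns per day. Little’s law gives the long-run average. Intraday peaks will be higher when volume clusters around particular hours.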

The ROE implications are straightforward. When your capital is trapped for 24 hours while competitors turn the same capital 288 times per day, you’re fighting a battle the arithmetic has already decided.

Traditional infrastructure was architected for business hours because markets closed. Batch processing ran overnight because there were no trades to process. System maintenance windows existed because uptime requirements aligned with market hours. Risk teams worked 9-to-5 because there was no risk to monitor after 4 PM.

Digital asset markets don’t close. There are no maintenance windows. No closing bell at 4 PM. Volatility events strike at 3 AM on Sunday when your compliance team is offline and your batch settlement jobs are mid-cycle.

Scenario 1: The Capital Efficiency Gap (24 Hours vs 5 Minutes)

Capital sits in clearing accounts for 24 hours under T+1 while blockchain competitors achieve settlement finality in under 5 minutes. Not every chain clears that bar: Ethereum mainnet reaches finality in roughly 12-15 minutes, while scaling networks such as Polygon produce blocks about every 2.1 seconds.
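The Ethereum figure follows from its proof-of-stake slot structure. Slots last 12 seconds and an epoch is 32 slots. Under normal conditions, a block is finalized after roughly two epochs. This back-of-the-envelope sketch ignores where in an epoch a transaction lands and any periods when the chain fails to finalize:

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
EPOCHS_TO_FINALITY = 2  # typical case; finality can take longer under network stress

finality_seconds = EPOCHS_TO_FINALITY * SLOTS_PER_EPOCH * SECONDS_PER_SLOT
print(f"~{finality_seconds} s = {finality_seconds / 60:.1f} minutes")
```

That comes to about 12.8 minutes. This falls inside the quoted 12-15 minute range and sits well above the 5-minute benchmark used for the float calculations.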

ROE degradation isn’t a risk model problem. It’s an architecture problem.

Consider a $500 million AUM fund that trades notional equal to its full AUM every day. On traditional rails with T+1 settlement, it maintains, on average, $500 million in settlement float. The opportunity cost of that trapped capital, at even a conservative 5% annual return, is $25 million annually in forgone returns.

The same fund operating on blockchain settlement rails with 5-minute finality maintains $1.74 million in average settlement float. The capital efficiency gain is $498.26 million in freed liquidity that can be deployed for alpha generation.
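The $500 million example can be checked the same way. Here is a minimal sketch. It assumes the fund trades notional equal to its full AUM every day, and that freed capital would earn the same 5% annual return used in the text:

```python
ANNUAL_RETURN = 0.05       # conservative return on deployable capital (from the text)
MINUTES_PER_DAY = 24 * 60  # T+1 treated as a flat 24 hours


def trapped_capital(daily_notional: float, settlement_minutes: float) -> float:
    """Average settlement float for a given daily notional and settlement time."""
    return daily_notional * settlement_minutes / MINUTES_PER_DAY


def annual_opportunity_cost(daily_notional: float, settlement_minutes: float,
                            annual_return: float = ANNUAL_RETURN) -> float:
    """Return forgone each year on capital sitting in settlement."""
    return trapped_capital(daily_notional, settlement_minutes) * annual_return


daily = 500_000_000  # assumption: the fund turns over its full AUM daily
for label, minutes in [("T+1", MINUTES_PER_DAY), ("5-minute finality", 5)]:
    print(f"{label:>18}: float ${trapped_capital(daily, minutes):,.0f}, "
          f"opportunity cost ${annual_opportunity_cost(daily, minutes):,.0f}/yr")

freed = trapped_capital(daily, MINUTES_PER_DAY) - trapped_capital(daily, 5)
print(f"Freed liquidity: ${freed:,.0f}")
```

At 5-minute finality, the annual opportunity cost drops to roughly $87,000, versus $25 million under T+1. The freed liquidity rounds to the $498.26 million quoted above.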

In 20+ years of architecting trading systems, I’ve observed that the firms treating settlement latency as an infrastructure constraint rather than an unavoidable cost are the ones building competitive moats. The desk that can turn capital 288 times per day while competitors turn it once has a structural advantage no amount of alpha research can overcome.

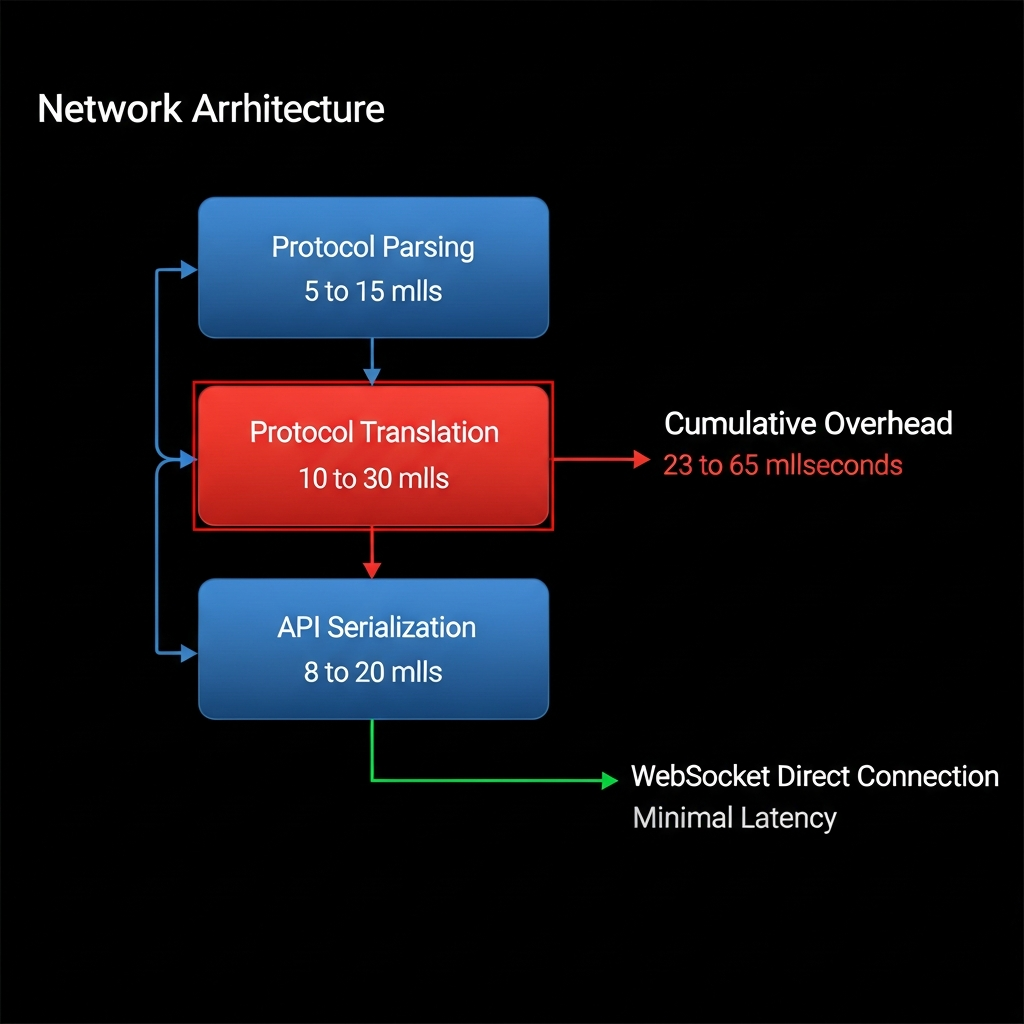

Scenario 2: The Protocol Translation Bottleneck (FIX vs REST/WebSocket)

Legacy systems speak proprietary FIX dialects through VPNs. Digital asset venues demand RESTful APIs and WebSocket feeds. The translation layer becomes the bottleneck, and arbitrage windows close before orders route.

The FIX Protocol (Financial Information eXchange) has been the standard for equity order routing since 1992. Over 300 banks and exchanges use FIX 5.0 SP2 for equities. The protocol was designed for reliable message delivery in a world where 100-millisecond latency was acceptable.

Crypto venues speak REST/WebSocket because they were built in a post-cloud era where HTTP-based APIs are the native language of the internet. The architectural impedance mismatch is profound.

BlockFills, an institutional crypto liquidity aggregator, built its entire value proposition around solving this connectivity problem. Its platform translates FIX messages from traditional order management systems into REST/WebSocket calls for crypto exchanges, providing sub-50-millisecond translation latency.
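Here is a minimal sketch of what such an adapter does to a single FIX NewOrderSingle (MsgType 35=D). It splits the tag=value pairs on the SOH delimiter. It then maps the standard tags (11 ClOrdID, 55 Symbol, 54 Side, 38 OrderQty, 40 OrdType, 44 Price) onto a JSON order body. The JSON field names are hypothetical, since each venue defines its own REST schema. The sample message also omits the BodyLength and CheckSum fields a real session would carry. Sessions, sequence numbers, and execution reports flowing back are out of scope:

```python
import json

SOH = "\x01"                              # FIX field delimiter
SIDES = {"1": "buy", "2": "sell"}         # tag 54 values
ORD_TYPES = {"1": "market", "2": "limit"} # tag 40 values


def parse_fix(raw: str) -> dict[str, str]:
    """Split a tag=value FIX message into a dict keyed by tag number."""
    fields = (pair.split("=", 1) for pair in raw.strip(SOH).split(SOH))
    return {tag: value for tag, value in fields}


def new_order_to_rest(raw: str) -> str:
    """Translate a FIX NewOrderSingle into a hypothetical venue JSON order body."""
    msg = parse_fix(raw)
    if msg.get("35") != "D":
        raise ValueError(f"not a NewOrderSingle: 35={msg.get('35')}")
    order = {
        "client_order_id": msg["11"],
        "symbol": msg["55"],
        "side": SIDES[msg["54"]],
        "type": ORD_TYPES[msg["40"]],
        "quantity": msg["38"],  # kept as a string to avoid float rounding
    }
    if order["type"] == "limit":
        order["price"] = msg["44"]
    return json.dumps(order)


fix_msg = SOH.join(["8=FIX.4.4", "35=D", "11=ORD-1", "55=BTC-USD",
                    "54=1", "38=0.5", "40=2", "44=64000"]) + SOH
print(new_order_to_rest(fix_msg))
```

Each mapping like this sits on the critical path of every order. That is why the adapter tends to become the constraint as latency budgets tighten.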

But here’s what the BlockFills solution reveals: If your competitive advantage requires a third-party vendor to translate between your internal systems and the markets you trade, your architecture is fundamentally misaligned with the opportunity.

A pattern I’ve seen repeatedly across high-frequency desks is the “adapter layer illusion”—the belief that a sufficiently fast translation layer can bridge architectural eras. In practice, the adapter becomes the constraint. When milliseconds matter, every protocol hop is a tax on execution quality.

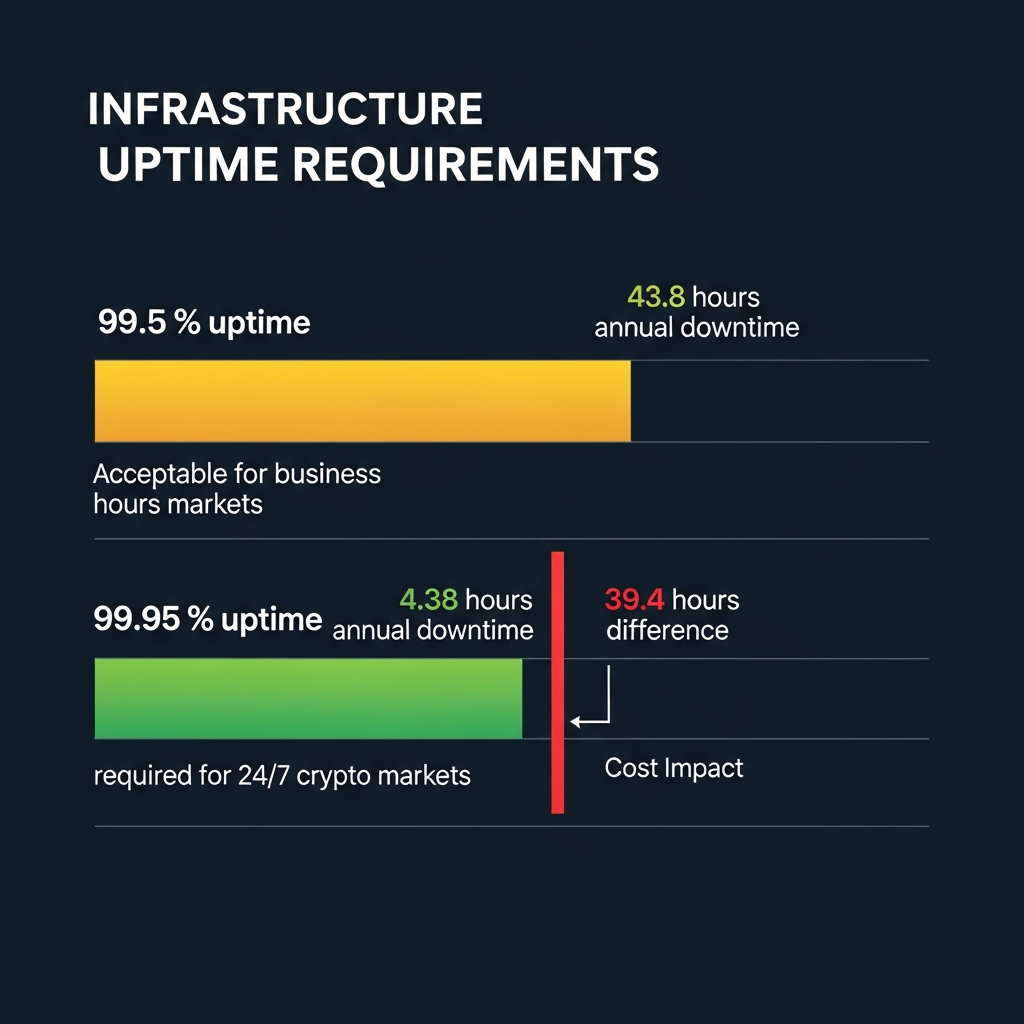

Scenario 3: The Uptime Requirement Mismatch (99.5% vs 99.95%)

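A system designed for 99.5% uptime was acceptable when markets closed. That uptime target allows 43.8 hours of annual downtime. This is perfectly reasonable when regular U.S. equity trading hours (9:30 AM to 4:00 PM ET, roughly 252 sessions a year) leave more than 7,000 hours annually in which maintenance can be scheduled without touching the trading session.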

In an environment requiring 99.95%+ continuous availability, 99.5% uptime fails catastrophically. A 99.95% target permits only 4.38 hours of downtime per year, so the 0.45-percentage-point gap translates to 39.4 additional hours of annual unscheduled downtime. When competitors never stop and your systems are offline for the equivalent of a full work week annually, the P&L impact is measurable.
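Both downtime budgets follow from the 8,760 hours in a non-leap year. Here is a minimal sketch of the arithmetic. The per-month figure simply divides the annual budget by twelve:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours


def downtime_budget_hours(availability: float) -> float:
    """Maximum annual downtime permitted by an availability target."""
    return (1 - availability) * HOURS_PER_YEAR


legacy = downtime_budget_hours(0.995)   # 99.5%
target = downtime_budget_hours(0.9995)  # 99.95%
print(f"99.5%:  {legacy:.1f} h/yr")
print(f"99.95%: {target:.2f} h/yr ({target * 60 / 12:.0f} min/month)")
print(f"Gap:    {legacy - target:.1f} h/yr")
```

A 99.95% target leaves only about 22 minutes of downtime per month. That is too short for a human-driven recovery procedure to fit inside comfortably.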

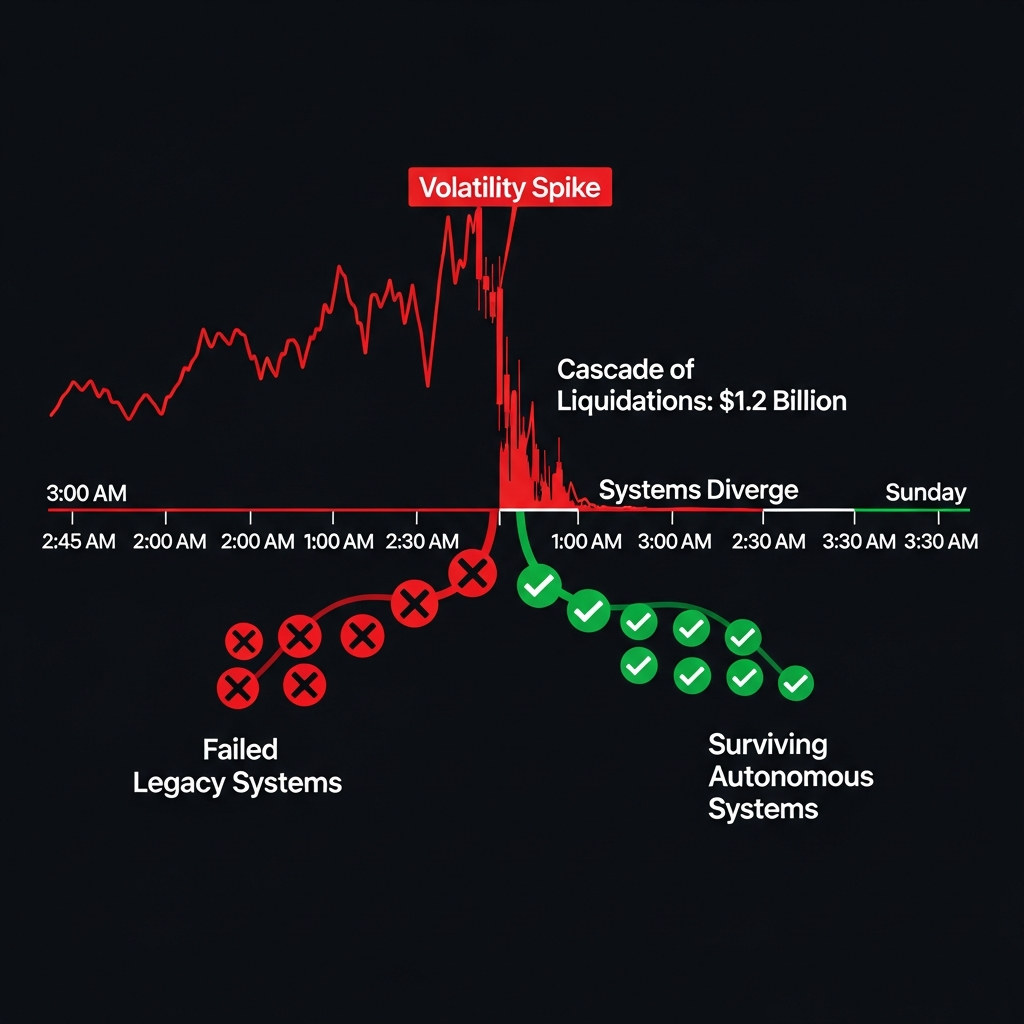

The February 3, 2025 Ethereum flash crash demonstrates the stakes. In the off-hours between Sunday, February 2, and Monday, February 3, $1.2 billion in liquidations occurred across major exchanges including Coinbase and Kraken. Traditional trading desks, with operations teams offline and maintenance windows scheduled, had no mechanism to respond.

The firms that survived that event had deterministic failover architectures that operated autonomously. The firms that took losses were running systems designed for business hours.

Uptime requirements drive architectural decisions in ways that aren’t obvious until failure occurs. A 99.5% uptime system can tolerate single points of failure with manual recovery procedures. A 99.95% system requires active-active redundancy, automated failover, and zero operator dependency for recovery.

The 3 AM Volatility Problem: When Legacy Operations Fail

Volatility events don’t wait for business hours. The February 2025 Ethereum flash crash struck overnight from Sunday into Monday, and $1.2 billion in liquidations were processed in a 45-minute window. Operations teams were offline. Batch settlement jobs were mid-cycle. Manual intervention protocols were unavailable.

The firms that survived had systems that didn’t require human operators to make decisions during volatility events. The firms that took losses were running architectures designed for 9-to-5 operations.

This is where theoretical architecture debates become P&L reality. When a $100 million position is liquidating at 3 AM and your risk management system is waiting for an operator to acknowledge an alert, you’re not trading—you’re gambling on human availability.

I’ve learned this pattern through direct observation across multiple volatility events: The difference between desks that protect capital and desks that bleed capital during off-hours volatility is deterministic failover. The system either knows what to do without human intervention, or it waits for an operator who might not be available for 20 minutes.

Twenty minutes during a flash crash is the difference between a managed exit and a forced liquidation at the worst possible price.

Deterministic Failover: Architecting for Autonomous Recovery

Deterministic failover means the system knows exactly what to do when the primary execution path fails, and it executes that response without operator intervention. There are no “alert and wait” procedures. No escalation protocols. No on-call engineers making judgment calls.

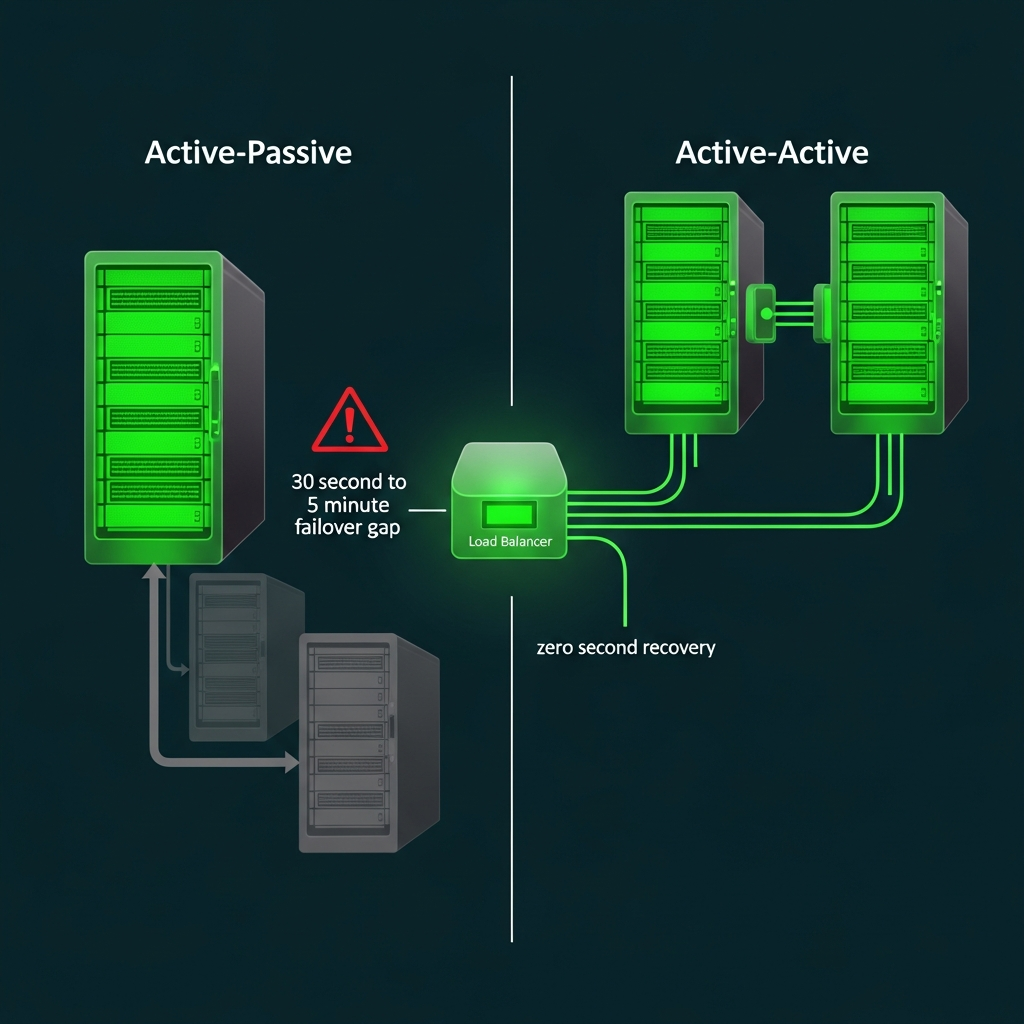

The architecture decision comes down to active-active vs active-passive failover strategies:

Active-Passive Failover (Traditional Architecture):

- Primary system handles all execution

- Passive backup system monitors primary health

- On failure detection, backup system activates

- Requires coordination protocol to prevent split-brain scenarios

- Recovery time: 30 seconds to 5 minutes depending on health check intervals

- Single point of failure during switchover window
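The active-passive recovery window is dominated by how long the backup waits before declaring the primary dead. Here is a minimal sketch of that arithmetic. It assumes the backup promotes itself after a fixed number of consecutive missed heartbeats. The interval, threshold, and promotion times are illustrative parameters, not measured values:

```python
def worst_case_failover_seconds(heartbeat_interval_s: float,
                                missed_before_failover: int,
                                promotion_s: float) -> float:
    """Rough upper bound on time from primary failure to backup taking orders.

    The primary can fail just after a successful heartbeat, so detection takes
    about `missed_before_failover` full intervals; promotion (fencing the old
    primary, loading state, reconnecting to venues) comes on top of that.
    """
    return heartbeat_interval_s * missed_before_failover + promotion_s


# Hypothetical configurations spanning the 30-second to 5-minute range in the text
aggressive = worst_case_failover_seconds(5, 3, 15)      # 30 s
conservative = worst_case_failover_seconds(30, 5, 150)  # 300 s
print(f"aggressive: {aggressive:.0f} s, conservative: {conservative:.0f} s")
```

Shrinking the interval narrows the window. However, it raises the risk of false failovers, which is exactly what the split-brain coordination protocol has to guard against.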

Active-Active Failover (24/7 Architecture):

- Multiple systems handle execution simultaneously

- Load balancing distributes orders across active systems

- Failed system is automatically removed from rotation

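- No promotion or leader election needed: surviving systems simply keep taking orders (shared state such as positions and risk limits must still be kept consistent across nodes)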

- Recovery time: Immediate (next order routes to healthy system)

- No single point of failure
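The “next order routes to a healthy system” behavior can be sketched as a round-robin router that drops failed nodes from rotation. This is a minimal illustration, not a production design. The node names and send functions are hypothetical stand-ins for real execution gateways, and the sketch assumes a send that raises never reached the venue:

```python
import itertools
from typing import Callable

SendFn = Callable[[dict], None]


class ActiveActiveRouter:
    """Round-robin order routing across several live execution nodes.

    A node whose send fails is pulled from rotation and the order is retried
    on the next healthy node, so there is no standby to promote. Assumes a
    failed send never reached the venue; in practice idempotent client order
    IDs are needed to rule out duplicate orders.
    """

    def __init__(self, nodes: list[tuple[str, SendFn]]):
        self.nodes = nodes
        self.healthy = {name for name, _ in nodes}
        self._cycle = itertools.cycle(nodes)

    def route(self, order: dict) -> str:
        # At most one pass around the ring per order
        for _ in range(len(self.nodes)):
            name, send = next(self._cycle)
            if name not in self.healthy:
                continue
            try:
                send(order)
                return name
            except ConnectionError:
                self.healthy.discard(name)  # remove the failed node from rotation
        raise RuntimeError("no healthy execution node available")


# Hypothetical nodes: node-b has just crashed
def ok(order: dict) -> None:
    pass


def down(order: dict) -> None:
    raise ConnectionError("node unreachable")


router = ActiveActiveRouter([("node-a", ok), ("node-b", down), ("node-c", ok)])
routed = [router.route({"id": i}) for i in range(4)]
print(routed)
```

The order that hit node-b is retried on node-c within the same call, and node-b never receives traffic again. A production router would also probe removed nodes and return them to rotation once they recover.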

The cost difference is significant. Active-active architectures require 2-3x infrastructure investment compared to active-passive designs. But when a 30-second failover window during a volatility event costs $5 million in forced liquidations, the ROI calculation is straightforward.

A framework I use when evaluating infrastructure readiness for 24/7 operations:

Question 1: If your primary execution venue becomes unavailable at 3 AM on Sunday, how long until orders route to secondary venues?

- If the answer is “when the on-call engineer acknowledges the alert,” your architecture is operator-dependent.

- If the answer is “the next order automatically routes to the secondary venue,” your architecture is deterministic.

Question 2: What happens to open positions when your risk management system detects anomalous behavior during off-hours?

- If the answer is “alert the risk team,” you’re gambling on human availability.

- If the answer is “automatically flatten positions according to pre-defined protocols,” you have autonomous risk management.
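A “pre-defined protocol” can be written down as code rather than a runbook. Here is a minimal sketch that assumes a single hypothetical trigger: mark-to-market drawdown beyond a fixed limit. It also assumes that flattening means an offsetting order for every open position. Real desks would combine several triggers and choose venue-aware order types:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlattenOrder:
    symbol: str
    side: str        # "sell" closes a long, "buy" closes a short
    quantity: float


def flatten_if_breached(positions: dict[str, float], pnl: float,
                        max_drawdown: float) -> list[FlattenOrder]:
    """Return offsetting orders for every position if drawdown exceeds the limit.

    The rule is deterministic: the same positions and P&L always produce the
    same orders, with no operator acknowledgement in the loop.
    """
    if pnl > -max_drawdown:
        return []  # within limits: do nothing
    return [
        FlattenOrder(symbol, "sell" if qty > 0 else "buy", abs(qty))
        for symbol, qty in sorted(positions.items())
        if qty != 0
    ]


book = {"ETH-USD": 1_200.0, "BTC-USD": -15.0, "SOL-USD": 0.0}
print(flatten_if_breached(book, pnl=-2_500_000, max_drawdown=2_000_000))
```

Sorting by symbol keeps the output order reproducible. This makes the protocol straightforward to test and audit after an event.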

Question 3: Can your settlement layer continue processing when your primary blockchain node goes offline?

- If the answer is “we’d need to restart the node,” you have a single point of failure.

- If the answer is “settlement continues through backup nodes without interruption,” you have redundancy.
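One common way to take a single node out of the settlement path is to submit the same signed transaction to several nodes at once. A signed transaction has a single hash, so duplicate submissions are harmless: the network includes it at most once. Here is a minimal sketch. The node clients are stubbed out as hypothetical callables rather than calls into any specific RPC library:

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable


def broadcast(signed_tx: bytes, nodes: dict[str, Callable[[bytes], object]]) -> list[str]:
    """Submit one signed transaction to every node in parallel.

    `nodes` maps a node name to a hypothetical submit(tx) callable that raises
    on failure. Returns the sorted names of nodes that accepted the transaction;
    settlement can proceed as long as at least one did.
    """
    if not nodes:
        raise ValueError("no nodes configured")
    accepted = []
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        futures = {pool.submit(submit, signed_tx): name for name, submit in nodes.items()}
        for fut in as_completed(futures):
            if fut.exception() is None:  # this node accepted the transaction
                accepted.append(futures[fut])
    if not accepted:
        raise RuntimeError("transaction rejected by every node")
    return sorted(accepted)


# Hypothetical node clients: the primary is down, two backups are healthy
def offline(tx: bytes) -> object:
    raise ConnectionError("primary node unreachable")


def online(tx: bytes) -> object:
    return "accepted"


print(broadcast(b"signed-tx-bytes", {"primary": offline, "backup-1": online, "backup-2": online}))
```

Because every node is tried on every submission, there is no switchover step at all. The primary going offline just means one fewer acceptance.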

The pattern I’ve observed across successful 24/7 trading operations is straightforward: They architect for the scenario where no human operator is available to make decisions. The system either knows what to do, or the system is incomplete.

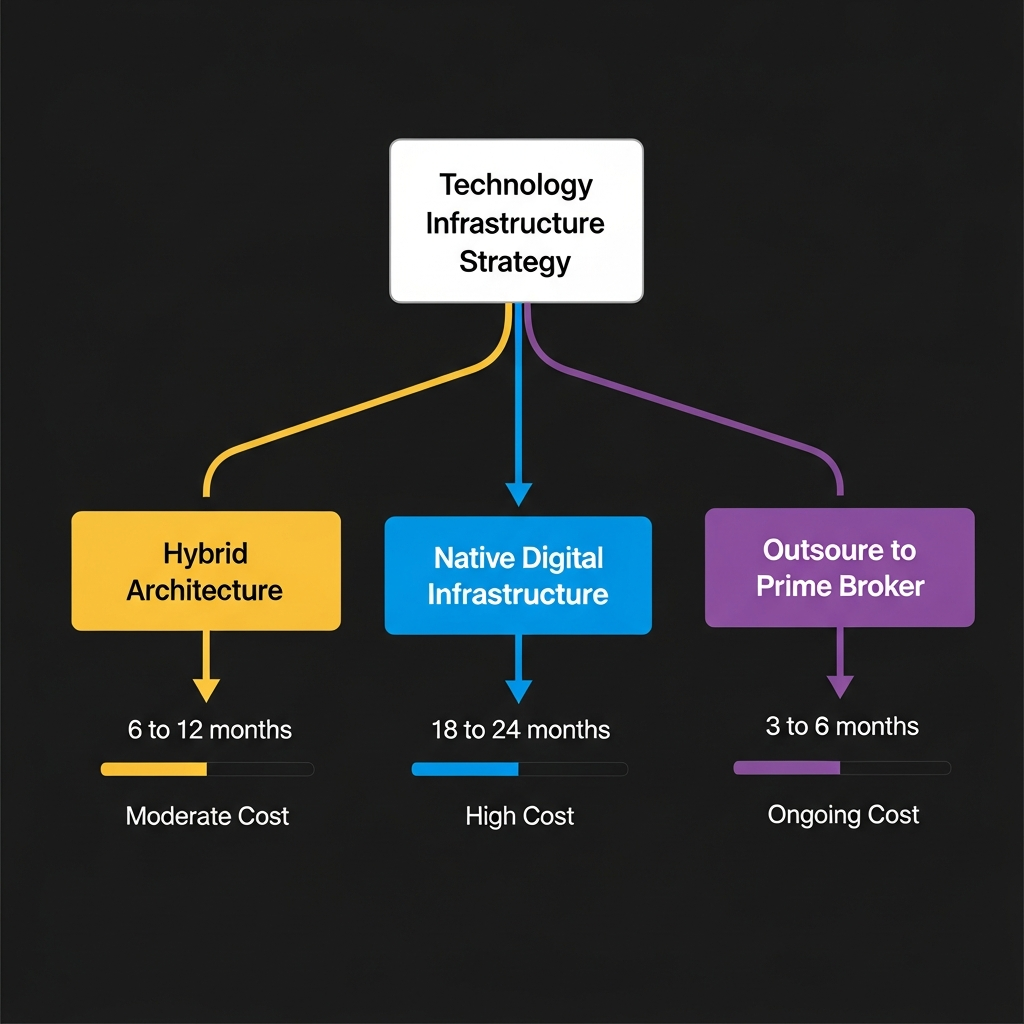

The Rebuild Decision: Architecture Assessment Framework

2026 marks the institutional era for digital assets. The technology matured years ago. What changed is that the legacy infrastructure gap became a competitive liability measured in basis points and trapped capital.

If you’re architecting for 24/7 digital asset operations while maintaining T+1 settlement rails, your technology stack is fighting your business model.

The firms solving this are rebuilding the settlement layer. Here’s a practical framework for assessing whether you’re in the “optimize existing architecture” camp or the “rebuild from scratch” camp:

Infrastructure Readiness Checklist:

Settlement Layer:

- [ ] Does your settlement layer support sub-5-minute finality?

- [ ] Can you operate with 99.65% less trapped capital than current T+1 processes?

- [ ] Is settlement processing completely automated without batch windows?

Connectivity Layer:

- [ ] Can your order routing translate FIX to REST/WebSocket in <50ms?

- [ ] Do you maintain native WebSocket connections to digital asset venues?

- [ ] Is protocol translation happening at the edge (venue adapters) rather than the core (central OMS)?

Operational Layer:

- [ ] Does your system operate with 99.95%+ uptime without operator intervention?

- [ ] Can your risk management system flatten positions autonomously during off-hours volatility?

- [ ] Is your failover architecture active-active rather than active-passive?

Capital Efficiency:

- [ ] Can you quantify the opportunity cost of capital trapped in settlement?

- [ ] Have you calculated the ROE impact of 24-hour settlement cycles vs 5-minute finality?

- [ ] Does your capital allocation model assume 24/7 liquidity availability?

If you answered “no” to more than half of these questions, you’re running a business-hours architecture in a 24/7 market. The adapter layer strategy might buy you 12-18 months, but the rebuild conversation is inevitable.

If you answered “no” to more than three-quarters of these questions, the rebuild should start now. Every quarter you delay adds another quarter of accumulated competitive disadvantage.
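With the twelve checklist items, “more than half” means seven or more “no” answers, and “more than three-quarters” means ten or more. Here is a minimal sketch of those thresholds. The function name is hypothetical, and the verdict strings paraphrase the text. The checklist does not say what happens below both thresholds, so treating that case as the “optimize existing architecture” camp is an assumption:

```python
def rebuild_verdict(answers: list[bool]) -> str:
    """Map checklist answers (True = "yes", False = "no") to the text's thresholds."""
    if not answers:
        raise ValueError("no answers supplied")
    no_share = answers.count(False) / len(answers)
    if no_share > 0.75:
        return "rebuild now"
    if no_share > 0.5:
        return "plan the rebuild"
    # Below both thresholds; treating this as the "optimize" camp is an assumption.
    return "optimize existing architecture"


# Twelve checklist items, eight answered "no"
answers = [True, False, False, False, True, False, False, False, True, False, True, False]
print(rebuild_verdict(answers))
```

Note that exactly nine “no” answers (75%) does not cross the three-quarters line under the strict “more than” wording.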

The Path Forward

The infrastructure gap between traditional financial systems and digital asset markets is no longer theoretical. It’s quantifiable: even after T+1 freed roughly $750B in trapped capital, a full day of settlement float remains against blockchain’s sub-5-minute finality. It’s operational: 99.5% uptime designed for business hours vs 99.95% requirements for 24/7 markets. It’s architectural: FIX Protocol translation layers adding 23-65ms latency to every order.

The question for CTOs at traditional institutions isn’t whether to address this gap. The question is whether to rebuild incrementally or fundamentally.

I’ve architected trading systems through three infrastructure eras—floor trading to electronic execution, market data standardization to direct exchange feeds, and now traditional settlement to blockchain finality. The pattern is consistent: The firms that recognize architectural discontinuities early and rebuild proactively build decade-long competitive advantages. The firms that optimize incrementally wake up five years later wondering why their competitors’ cost structures are 40% lower.

The settlement layer is the new battleground. The desks that solve deterministic failover for 24/7 operations while traditional competitors still design for business hours will capture the institutional digital asset opportunity.

What is the best way to manage deterministic failover when your primary execution path fails at 3 AM and your operations team is unavailable? If you’re still designing alert-and-escalate protocols instead of autonomous recovery systems, we should review your architecture roadmap.

This article was originally shared as a LinkedIn post. For infrastructure assessments and 24/7 architecture reviews, visit hftadvisory.com.